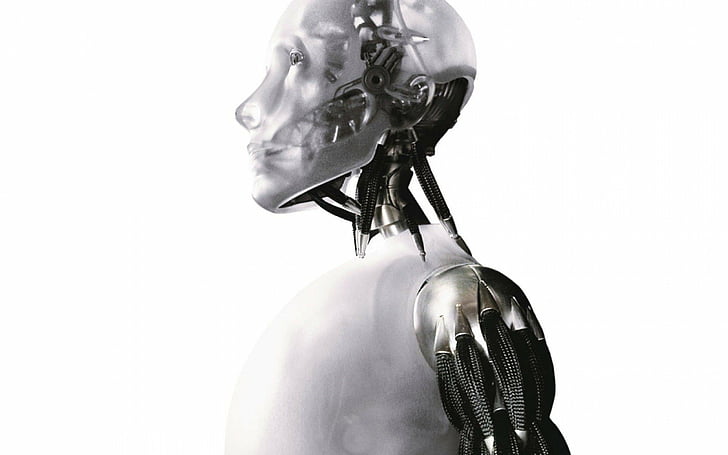

Is your AI feeding?

Let's bust the myth that AI is evolving very fast!

There is no doubt that AI has come a long way since its inception. From simple rule-based systems to advanced ML algorithms, AI has made significant strides in understanding and processing data.

However, when it comes to complex problem solving, AI still falls short. Why?

In this blog post, I will explore one of the issues I've come across while using generative AI tools, be it Gemini, ChatGPT, Claude or Grok.

The Rise of Gen AI

I remember the Deep Blue computer. I was very young and learning to play chess when I found out that, some years earlier, a chess computer had defeated the then world champion Garry Kasparov. Later, in my teens, when YouTube exploded, I even watched the games. Little did I know that this was the start of something that would fascinate me for years to come. Gen AI represents the pinnacle of AI development so far, where machines can perform tasks that were once exclusive to humans.

Over the years, AI has made tremendous progress in various domains. From speech recognition to image classification, AI algorithms have become increasingly accurate and efficient. Companies have started integrating AI into their products and services, revolutionizing industries such as healthcare, finance, and transportation.

The Limitations of Complex Problem Solving

Deep Blue was a monumental feat, but I now know it could only excel within the tightly defined rules and predictable environment of chess. Such a system would struggle with messy, open-ended problems that require real-world understanding and nuance.

While AI has made significant advancements, it still struggles when it comes to solving complex problems. Complex problems require a deep understanding of context, the ability to reason and think critically, and the capacity to handle ambiguity. These are areas where AI falls short.

Do check out this hilarious interaction between a Twitter user and Grok, and you will understand where I'm coming from!

One of the primary reasons for AI's limitations in complex problem solving is the lack of human-like intuition. Humans can draw upon their past experiences, knowledge, and emotions to tackle complex problems. AI, on the other hand, relies on predefined algorithms and data patterns to make decisions. It lacks the ability to think creatively and adapt to new situations. In other words, it doesn't really feed on data as easily as we expect.

Another challenge for AI in complex problem solving is that processing power is not the same as understanding. OK, I get it: it's faster than ever. But does raw processing power translate into true comprehension? I don't think so. I've tried some complex linear equation problems with ChatGPT and Bard, and each time I tried to walk them through how to solve the next step, they got confused and fell completely flat. They're nowhere near the human brain when it comes to understanding context.

At Numbreylla, we actively work to integrate AI into our business processes to streamline our operations. We have been quite successful; however, the problem lies in keeping it up to date. Each time, we have to update its knowledge base, and that sometimes creates a gap in the accurate flow of information. An AI should evolve with time by itself instead of having to be fed at the source. Its responses are based on patterns it has identified in data, not a true grasp of the situation.